I was a Cursor agent power user for months. I even wrote the Cursor tips post that thousands of developers reference every week.

Then Claude Code came out. That became my go-to.

And now my go-to has changed again. I didn't want it to happen, but let me explain why.

Let's compare the agents, features, pricing and user experience.

All of these products are converging. Cursor’s latest agent is pretty similar to Claude Code’s latest agent, which is pretty similar to Codex’s agent.

Historically, Cursor set a lot of the foundation. Claude Code made improvements. Cursor copied useful things like to-do lists and better diff formats, and Codex adopted a lot of those too.

Codex is so similar to Claude Code that I honestly wonder if they trained off Claude Code’s outputs as well.

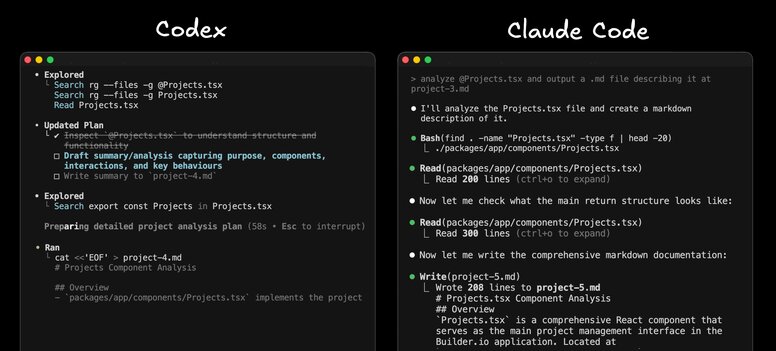

Small behavioral differences I notice:

- Codex tends to reason a bit longer, but its visible tokens-per-second output feels faster.

- Claude Code tends to reason less, but its visible output tokens come a bit slower.

- Inside Cursor, switching models changes the feel along the same lines: GPT-5 spends longer reasoning, while Sonnet spends less time reasoning and more time outputting code. That code comes out slightly slower, though, especially if you use Opus.

Ultimately, the agents are comparable. If you prefer Cursor or Claude Code or Codex, I respect it. I have a slight preference for Codex or Claude Code, mostly because the company that builds the tool also trains the models and seems to optimize the end-to-end loop.

Winner: Tie

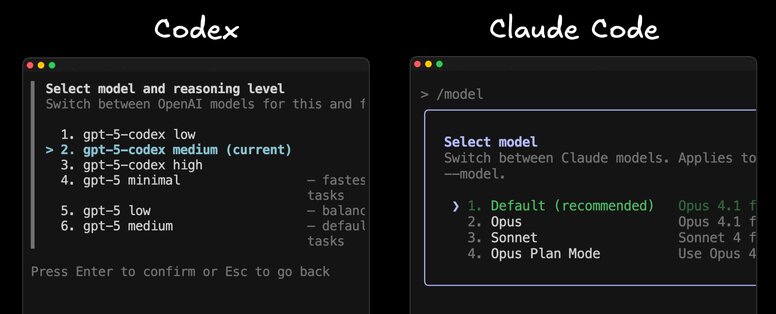

I have become pretty fond of the GPT-5 Codex model. It has improved at knowing how long to reason for different kinds of tasks. Over-reasoning on basic tasks is annoying.

Codex also lets me pick low, medium, high, or even minimal reasoning for super fast runs. I like these options more than having just two model choices in Claude Code. Cursor, on the other hand, has a lot of options, which is cool in theory but can be a bit overwhelming in practice.

I like that the same company that makes the tool also trains the models. They should know how to use the model best, and they should be able to give me the best price, since there is no middleman that also needs a margin.

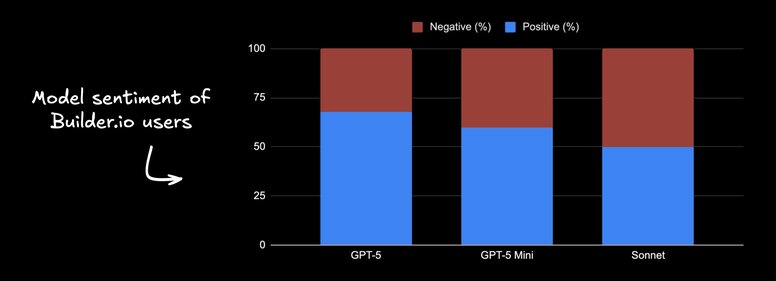

When we measured sentiment of Builder.io users using GPT-5, GPT-5 Mini, and Claude Sonnet, they rated GPT-5 40% higher on average.

Ultimately, different people prefer different models, so we'll just call this a tie.

Winner: Tie

Codex comes with standard ChatGPT plans. Claude Code comes with standard Claude plans.

On the surface, pricing tiers look similar: free, the roughly twenty-dollar tier, and the higher one hundred to two hundred tiers.

The key thing: GPT-5 is significantly more efficient under the hood than Claude Sonnet, and especially Opus. In recent production usage, quality feels comparable by most anecdotes and public benchmarks, but GPT-5 costs roughly half of Sonnet, and closer to a tenth of Opus, which means Codex can offer more usage for less.
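To make that arithmetic concrete, here is a small Python sketch. It only encodes the rough ratios stated above (GPT-5 at about half the cost of Sonnet and about a tenth of Opus); the names `RELATIVE_COST` and `relative_usage` are made up for illustration, and none of these numbers are published prices.

```python
# Relative cost per unit of work, normalized so GPT-5 = 1.0.
# These ratios are the rough claims from the text, not real price lists.
RELATIVE_COST = {
    "gpt-5": 1.0,
    "sonnet": 2.0,   # GPT-5 costs roughly half of Sonnet
    "opus": 10.0,    # GPT-5 costs roughly a tenth of Opus
}


def relative_usage(budget: float = 1.0) -> dict[str, float]:
    """Return how many units of work a fixed budget buys with each model."""
    return {model: budget / cost for model, cost in RELATIVE_COST.items()}


if __name__ == "__main__":
    usage = relative_usage()
    baseline = usage["sonnet"]
    for model, units in usage.items():
        # Express each model's usage as a multiple of what Sonnet would give.
        print(f"{model}: {units / baseline:.1f}x the usage of Sonnet")
```

Under those assumed ratios, the same budget stretches about twice as far on GPT-5 as on Sonnet and about ten times as far as on Opus, which is the intuition behind "more usage for less."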

Providers are not always explicit about exact request and token counts per plan, but in my experience Codex seems more generous.

Many more people can live comfortably on the 20-dollar Codex plan than on Claude’s 17-dollar plan, where limits get hit quickly. Even on the 100 and 200 tiers for Claude, heavy users still bump ceilings. With Codex Pro, I almost never hear about users hitting limits.

It is also worth noting that these are not just “coding plans.” You also get ChatGPT or Claude Chat. With ChatGPT you also get one of the best image generation models and video generation models, plus generally more polished products like the ChatGPT desktop app I use daily.

Claude’s desktop app feels slower and more like a basic Electron wrapper. Claude does have better MCP integrations with many one-click connectors. Day to day though, I am a ChatGPT user.

Given that the number one complaint I hear about coding agents is running out of credits, Codex has a real edge.

Winner: Codex

Codex recognizes a git-tracked repo and is permissive by default. Nice.

Claude Code’s permission system drives me crazy, and I routinely launch it with --dangerously-skip-permissions. It is an unnecessary risk, but the workflow friction is high and settings do not persist.

Terminal UIs are fine in both. Claude Code’s terminal UI is a touch nicer and clearly more mature, and it ultimately gives you more control over permissions.

So while I think this is pretty neck and neck, Codex feels less mature and more basic overall, so I give the edge to Claude Code.

Winner: Claude Code

Claude Code has more features: sub-agents, custom hooks, lots of configuration. For a deep dive on a bunch of Claude Code features, you can see my best tips here. Cursor has a good bit of features as well (see my Cursor tips here), but Codex is the most limited.

Claude Code has all the slash commands.

Where Codex does shine, though, is that it’s open source, so you can customize it any way you like or learn from it to develop your own agent.

But here is my honest take. Cursor once asked me what features they should add to win me back from Claude Code. I had learned from Claude Code that I do not care about features. I want the best agent, clear prompts, and reliable delivery. So I do not miss features when I do not have them. I need an agent and a good instructions file. That is it.

But all that said, if you want a lot of features (including some that are definitely pretty useful), Claude wins.

Winner: Claude Code

I have a whole separate video and post on writing a good AGENTS.md. One thing that annoys me about Claude Code is that it does not support the AGENTS.md standard, only CLAUDE.md.

Tools like Cursor, Codex, and Builder.io all support AGENTS.md. It is annoying to maintain a separate file for Claude when everything else respects the standard.
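One possible workaround for the duplicate file (not something this post prescribes) is to keep the shared instructions file as the single source of truth and make the Claude file a symlink to it. The sketch below assumes the files are named `AGENTS.md` and `CLAUDE.md` at the repo root, that your OS allows creating symlinks (Windows may require extra privileges), and that the tool follows symlinks when reading its instructions file. The helper name `link_claude_md` is hypothetical.

```python
import os
from pathlib import Path


def link_claude_md(repo_dir: str) -> Path:
    """Make CLAUDE.md a relative symlink to AGENTS.md inside repo_dir."""
    repo = Path(repo_dir)
    source = repo / "AGENTS.md"
    link = repo / "CLAUDE.md"

    if not source.is_file():
        raise FileNotFoundError(f"{source} does not exist")

    if link.is_symlink():
        # Replace a stale or previously created link.
        link.unlink()
    elif link.exists():
        # Refuse to overwrite a real file that may hold unique instructions.
        raise FileExistsError(
            f"{link} is a regular file; merge it into AGENTS.md first"
        )

    # Use a relative target so the link still resolves if the repo is moved.
    os.symlink("AGENTS.md", link)
    return link
```

Calling `link_claude_md(".")` from the repo root would leave one file to edit. If symlinks are not an option in your setup, copying the file in a pre-commit step is a cruder alternative.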

Winner: Codex

This is the main reason I prefer Codex.

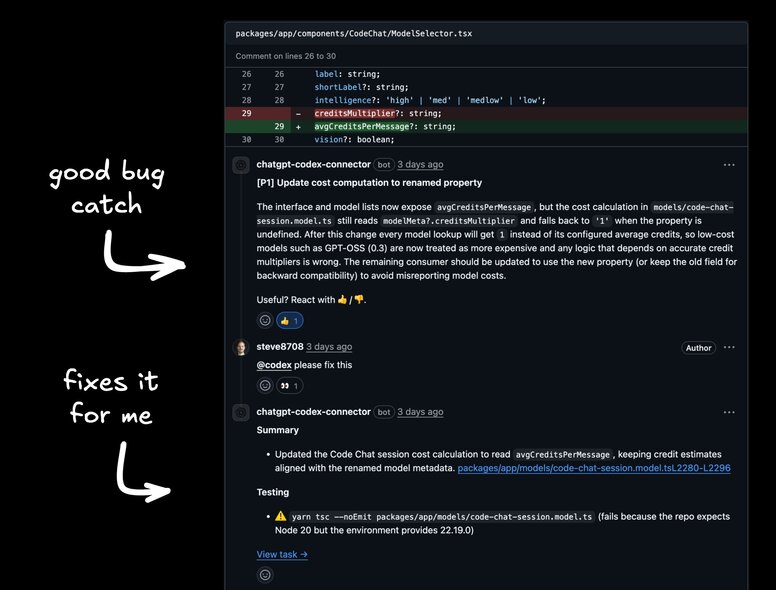

We tried Claude Code’s GitHub integration briefly. The Builder.io dev team thought it sucked. Reviews were verbose without catching obvious bugs. You could not comment and ask it to fix things in a useful way. It just did not deliver value.

Codex’s GitHub app has been the opposite. Install it, turn on auto code review per repo, and it actually finds legitimate, hard-to-spot bugs. It comments inline, you can ask it to fix issues, it works in the background, and it lets you review and update the PR right there, then merge.

Crucially, the feel matches my terminal experience. The prompts that work in CLI work from the GitHub UI. Same model, same configuration, same behaviors. That consistency matters.

Runner-up here is Cursor’s Bugbot. It finds good bugs and offers useful “fix in web” or “fix in Cursor” paths. You cannot really go wrong with either. I still prefer Codex for the pricing, model integration, and consistency with my CLI flow.

Winner: Codex

We recently integrated Codex into Builder.io, which solves the one gripe I have with both of these options: they have no real UI. If you need to iterate on visuals with AI, you can do it quickly here and include non-developers in the loop.

In 2025, I do not like handoffs. Our designers jump into Builder.io and use Codex to update sites and apps through prompting and a Figma-like visual editor. When they are ready, they send PRs. We review and merge.

Best part: everyone builds on the same foundation and codebase, using the same models and the same AGENTS.md. Designers, PMs, and engineers all align.

My personal winner right now is Codex. I use it daily. I like it a lot. The GitHub integration is excellent, the pricing and limits are favorable, the model options fit how I work, and the end-to-end consistency matters.

But to be completely honest, these days you really can’t go wrong with any of these options. If you prefer Claude Code or Cursor, I completely respect that.

Have you tried the Codex CLI or background agents and PR bot? How did it go for you? Drop your experience and tips in the comments.

Builder.io visually edits code, uses your design system, and sends pull requests.

Connect a Repo
